The web protocols that quietly power every React or mobile app carry decades of engineering trade-offs — from TCP ordering to QUIC stream independence.

This article explores that evolution in depth: how HTTP/1.1, 2, and 3 differ, what they mean for back-end and front-end developers, and how platforms like Nginx, Vercel, and Cloudflare bring it all together.

Settle in — this article is not a quick read, but it gathers the detail that turns “black-box performance” into predictable engineering.

When your React or React Native app loads, hundreds of packets race across networks governed by invisible rules — HTTP versions. Each upgrade — from HTTP/1.1 to HTTP/2 to HTTP/3 — reshaped how browsers, servers, and frameworks communicate.

Understanding these layers helps developers build faster, safer, and more resilient applications.

Why Protocol Versions Still Matter

Modern frameworks (Next.js, Remix, Astro, Expo) handle a lot for you, but protocol behavior still affects:

Latency on first load and during navigations

Tail performance (p95/p99) under packet loss or mobile transitions

Concurrency of module, font, image, API, and streaming fetches

Security posture (TLS 1.3, replay risks, Zero‑Trust hops)

Observability and capacity planning

Layer Refresher (Mental Model)

Application Layer: Defines HTTP request/response meaning (methods, headers, bodies) and stays stateless per request.

Transport Layer: Moves bytes reliably (TCP) or via independent encrypted streams (QUIC over UDP).

Encryption: TLS 1.2/1.3 secures data above TCP; QUIC bakes TLS 1.3 directly into transport.

Multiplexing: Multiple logical streams share one connection (HTTP/2 on TCP; HTTP/3 on QUIC without cross-stream blocking).

Analogy:

HTTP defines the message.

TCP insists every letter arrive strictly in order.

QUIC lets each envelope arrive independently—no one waits for the slowest.

HTTP/1.1 — in a modern web environment

HTTP/1.1 powered the early web. It introduced:

Persistent connections

Chunked transfer encoding

Basic caching semantics enhancements

But every request on a connection still traveled over a single, ordered TCP stream.

When packet #5 was delayed, packets #6–#100 waited behind it.

This created one of the web’s earliest bottlenecks: Head-of-Line Blocking (HOLB).

Deep Dive: HTTP is Stateless, but HOLB Isn’t

A common question is:

“If HTTP is stateless, why does one delayed packet stall everything?”

Here’s the distinction:

HTTP lives at the application layer, defining what data is exchanged (GET, POST, headers, body). It’s stateless because each request carries all needed context and doesn’t depend on earlier ones.

HOLB lives at the transport layer (TCP), which guarantees ordered delivery of packets. If a packet is lost, the entire stream waits until it’s retransmitted.

The transport layer decides how data travels — handling delivery, ordering, and error correction between endpoints.

So while HTTP doesn’t maintain conversation state, the underlying TCP connection does maintain ordering state. That’s what causes global delays.
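To make the ordering state concrete, here is a minimal sketch of in-order delivery. The arrival times are made-up numbers, and the model ignores everything else TCP does (windows, congestion control); it only shows that a packet cannot be handed to the application before every earlier packet has arrived.

```python
from itertools import accumulate

def in_order_delivery_times(arrival_ms):
    """Return when each packet can be handed to the application.

    An ordered byte stream releases packet i only once packets 0..i have
    all arrived, so delivery time is a running maximum of arrival times.
    """
    return list(accumulate(arrival_ms, max))

# Packet 1 is lost; its retransmission arrives at 250 ms (illustrative values).
arrivals = [10, 250, 12, 13, 14]
print(in_order_delivery_times(arrivals))  # [10, 250, 250, 250, 250]
```

Packets 2 to 4 arrived on time, yet none of them is usable until the retransmission lands.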

HTTP/2 — Multiplexing and Compression, But Transport HOLB Remains

HTTP/2 reimagined how data flows over the same TCP base, bringing four key upgrades:

Binary framing — Requests/responses are split into structured frames for faster parsing.

Multiplexing — Multiple requests share one connection via independent streams.

HPACK header compression — Repeated headers like cookies are encoded as dictionary references.

Server Push (deprecated in practice): servers can pre-send assets they expect the client to need.
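Binary framing is easier to picture with the fixed 9-byte frame header that precedes every HTTP/2 frame: a 24-bit payload length, an 8-bit type, 8 bits of flags, one reserved bit, and a 31-bit stream identifier. The sketch below parses that header; the names `FrameHeader` and `parse_frame_header` are illustrative, and only a few frame types are mapped.

```python
from typing import NamedTuple

# Subset of frame type codes; unknown codes are shown as hex.
FRAME_TYPES = {0x0: "DATA", 0x1: "HEADERS", 0x4: "SETTINGS", 0x8: "WINDOW_UPDATE"}

class FrameHeader(NamedTuple):
    length: int      # payload length in bytes
    frame_type: str
    flags: int
    stream_id: int   # which multiplexed stream the frame belongs to

def parse_frame_header(data: bytes) -> FrameHeader:
    if len(data) < 9:
        raise ValueError("an HTTP/2 frame header is 9 bytes long")
    length = int.from_bytes(data[0:3], "big")
    type_code = data[3]
    flags = data[4]
    # Mask off the reserved top bit to get the 31-bit stream identifier.
    stream_id = int.from_bytes(data[5:9], "big") & 0x7FFFFFFF
    return FrameHeader(length, FRAME_TYPES.get(type_code, hex(type_code)), flags, stream_id)

# A HEADERS frame: 13-byte payload, END_HEADERS flag (0x4), stream 1.
print(parse_frame_header(bytes.fromhex("00000d010400000001")))
```

Because every frame names its stream, frames from different requests can be interleaved on one connection and reassembled on the other side.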

What Improved:

Eliminated application-layer HOLB (no strict request sequencing).

Fewer TLS handshakes; better use of a single congestion window.

Significant header byte reduction for chatty APIs or GraphQL endpoints.

What Didn’t:

Transport-level HOLB remains. One lost TCP packet still stalls delivery of frames for all streams sharing that TCP connection until retransmission completes.

Why Server Push Fell Away:

Complexity in correctness (over-pushing/waste).

Hard to cache predictably.

Browsers (e.g., Chrome, Firefox) disabled or deprecated it.

Use instead: rel=preload, rel=preconnect, HTTP 103 Early Hints.

🔐 TLS 1.3, ALPN, and Edge Termination

Modern HTTP effectively means HTTPS.

TLS 1.3:

Fewer round trips (1-RTT; 0-RTT possible on resumption).

Forward secrecy enforced.

Cleaner cipher suite landscape.

ALPN (Application-Layer Protocol Negotiation):

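Client offers the protocols it supports (e.g., h2, http/1.1) in the TLS handshake.

Server picks one it also supports, usually its most preferred (e.g., h2).

h3 is negotiated the same way, but inside the QUIC handshake; clients typically discover it first through Alt-Svc or DNS HTTPS records.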
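As a rough sketch of both sides of ALPN: the client half uses Python's standard `ssl` module to advertise protocols, and the server half is a simplified selection policy (first server preference that the client also offered). The function names and the preference lists are assumptions for illustration; real servers apply their own policy.

```python
import ssl

def client_context(protocols=("h2", "http/1.1")):
    """Build a TLS client context that advertises these protocols via ALPN."""
    ctx = ssl.create_default_context()
    ctx.set_alpn_protocols(list(protocols))
    return ctx

def pick_protocol(client_offers, server_preferences):
    """Simplified server policy: first server preference the client offered."""
    for proto in server_preferences:
        if proto in client_offers:
            return proto
    return None  # no overlap; a real server may fall back or abort

print(pick_protocol(["h2", "http/1.1"], ["h2", "http/1.1"]))  # h2
print(pick_protocol(["http/1.1"], ["h2", "http/1.1"]))        # http/1.1
```

On a live connection wrapped with this context, `selected_alpn_protocol()` on the TLS socket reports what the server chose.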

TLS Termination at the Edge

On platforms like Vercel, Cloudflare, and Netlify, TLS usually terminates at their edge:

Browser ↔ Edge: Encrypted (TLS 1.3).

Edge ↔ Your Backend: Often re-encrypted with HTTPS, sometimes proxied via HTTP for internal performance.

Frameworks such as Express or Django must be configured to trust proxy headers (X-Forwarded-Proto, X-Forwarded-Host, X-Forwarded-For) to interpret the original connection as secure.
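The logic behind "trusting the proxy" can be sketched in a few lines. This is not how Express or Django implement it; it is a minimal illustration that assumes a hypothetical trusted edge range (`10.0.0.0/8`) and a headers dict keyed exactly as `X-Forwarded-Proto`.

```python
import ipaddress

# Hypothetical address range of the edge/proxy tier; adjust to your network.
TRUSTED_PROXIES = [ipaddress.ip_network("10.0.0.0/8")]

def original_scheme(peer_ip: str, headers: dict, direct_scheme: str = "http") -> str:
    """Return the scheme the browser used, honoring X-Forwarded-Proto
    only when the immediate peer is a trusted proxy."""
    peer = ipaddress.ip_address(peer_ip)
    if any(peer in net for net in TRUSTED_PROXIES):
        forwarded = headers.get("X-Forwarded-Proto", "")
        # Chained proxies may send a comma-separated list; the first entry
        # describes the client-facing hop.
        first = forwarded.split(",")[0].strip().lower()
        if first in ("http", "https"):
            return first
    # Untrusted peer or no usable header: fall back to the direct connection.
    return direct_scheme

print(original_scheme("10.1.2.3", {"X-Forwarded-Proto": "https"}))     # https
print(original_scheme("203.0.113.9", {"X-Forwarded-Proto": "https"}))  # http
```

The second call shows why the trust check matters: anyone can send the header, so it only counts when it comes from your own edge.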

Vercel note:

Serverless functions on Vercel run within the same TLS-terminated environment. You don’t manage certificates — the edge fabric handles TLS 1.3 automatically.

For self-hosting, Nginx or Caddy can terminate TLS and optionally re-encrypt upstream traffic using HTTPS or mTLS — aligning with Zero-Trust principles (“verify and encrypt every hop”).

HTTP/3 — QUIC: Stream Independence & Mobility

QUIC (RFC 9000) started at Google as “Quick UDP Internet Connections”; in the IETF standard, QUIC is simply a name, not an acronym. It is a transport protocol that blends reliability, encryption, and parallelism on top of UDP.

Key Concepts

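0-RTT (Zero Round-Trip Time): On resumption, clients reuse keys from a previous session to send data in their first flight, without waiting for the handshake to finish. Great for mobile users reconnecting frequently, but early data can be replayed, so limit it to idempotent requests.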

Stream Independence: Each stream has its own byte offsets and flow control, while packet numbers and loss recovery are shared at the connection level. A lost packet stalls only the streams whose data it carried; the others keep delivering.

Connection Migration: Sessions are tied to a connection ID rather than an IP address. If a device switches from Wi-Fi to 4G, the same connection continues.

Built-in TLS 1.3: Encryption is mandatory and integrated into the transport layer.

Header Compression: QPACK (not HPACK). Designed to avoid introducing head-of-line blocking during header table synchronization in a loss scenario.
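Stream independence can be illustrated with a toy model in which ordering is enforced per stream rather than per connection. The stream names and arrival times are invented, and the model assumes each packet carries data for exactly one stream, which real QUIC packets need not do.

```python
from collections import defaultdict

def per_stream_delivery(packets):
    """packets: list of (stream_id, arrival_ms) in send order.

    Returns {stream_id: [delivery_ms, ...]}; a packet waits only for
    earlier packets of the same stream.
    """
    latest = defaultdict(int)      # latest arrival seen so far, per stream
    delivered = defaultdict(list)
    for stream_id, arrival in packets:
        latest[stream_id] = max(latest[stream_id], arrival)
        delivered[stream_id].append(latest[stream_id])
    return dict(delivered)

# The first "js" packet is lost and retransmitted at 250 ms (illustrative).
packets = [("css", 10), ("js", 250), ("css", 12), ("img", 13), ("js", 14)]
print(per_stream_delivery(packets))
# {'css': [10, 12], 'js': [250, 250], 'img': [13]}
```

Only the `js` stream pays for the loss; over a single ordered byte stream, every packet sent after the lost one would have waited until 250 ms.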

Performance Gains:

Improved tail latency on lossy, mobile, or high-jitter networks.

Faster recovery from packet loss; unaffected streams continue.

Reduced handshake overhead (esp. with resumption).

Adoption:

Major CDNs and browsers widely support HTTP/3.

gRPC over HTTP/3 is supported by some language implementations.

Go / Rust: Strong HTTP/3 + QUIC libraries.

Node.js: Experimental / progressing; see Node.js issue #57281.

Python: User-space libs (aioquic) or via edge proxies (Caddy, Cloudflare) rather than stdlib.

Java / .NET: HTTP/3 adoption moving through library layers (Netty, Jetty, Kestrel updates).

Practical Impact by Role

Frontend / Web App Engineers

Don’t over-bundle solely to reduce request count (HTTP/2+ handles concurrency). Still bundle for code splitting strategy and caching boundaries.

Use rel=preload (critical CSS, fonts), rel=preconnect (third-party origins), and rel=dns-prefetch.

Prefer Early Hints (103) when supported to prime fetches earlier.

Eliminate bloat: large cookies and verbose headers consume compression tables.

Measure p95 Resource Timing, not just “Time to First Byte.”
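For the last point, a small nearest-rank percentile helper shows why the tail deserves its own number. The durations are invented sample data standing in for exported Resource Timing entries.

```python
import math

def percentile(samples, p):
    """Nearest-rank percentile: the smallest sample such that at least
    p percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]

# Hypothetical resource durations in milliseconds; one request hit a stall.
durations_ms = [80, 85, 90, 95, 100, 110, 120, 130, 150, 900]
print(percentile(durations_ms, 50), percentile(durations_ms, 95))  # 100 900
```

The median looks healthy while p95 exposes the stalled request, which is exactly the kind of delay that protocol-level behavior tends to cause.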

React Native / Mobile Clients

HTTP/3 improves resilience when radios churn (Wi‑Fi ↔ 4G).

Use keep-alive pools carefully; minimize forced connection churn.

Monitor tail API latencies under network transitions (simulate with network link conditioners).

Backend / API Engineers

Reduce header entropy (avoid ever-growing dynamic data in headers) to maximize HPACK/QPACK efficiency.

Validate proxies preserve :authority and correct scheme for downstream auth logic.

Tune HTTP/2 max concurrent streams and flow windows if using high-volume streaming (gRPC).
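The header-entropy advice can be illustrated with a deliberately crude cost model. This is not the real HPACK or QPACK encoding: it assumes an unbounded table, charges 1 byte for a header pair seen before and the literal length for a new pair, and uses made-up header values.

```python
def encoded_size(requests):
    """Toy cost model (not real HPACK): a (name, value) pair already in the
    table costs 1 byte; a new pair costs its literal length and is stored."""
    table = set()
    total = 0
    for headers in requests:
        for name, value in headers.items():
            if (name, value) in table:
                total += 1
            else:
                total += len(name) + len(value)
                table.add((name, value))
    return total

# Same headers on every request versus one header that changes each time.
stable = [{"authorization": "Bearer abc", "x-client": "web"} for _ in range(3)]
noisy = [{"authorization": "Bearer abc", "x-trace": f"ts-{i}"} for i in range(3)]
print(encoded_size(stable), encoded_size(noisy))
```

Even in this simplified model, the header whose value changes on every request never benefits from the table, which is the effect the recommendation aims to avoid.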

Infra / Platform

Roll out HTTP/3 via CDN toggle first—low risk.

Track metrics: loss %, retransmissions, handshake times, p95 vs p99 request latency segments.

Consider mTLS for internal re-encryption; align with Zero-Trust.

Use Alt-Svc headers to advertise HTTP/3 (Alt-Svc: h3=":443"; ma=86400).
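To show what a client reads out of an Alt-Svc value, here is a simplified parser. It handles the common `protocol="authority"; ma=seconds` shape and the special value `clear`, and it does not cover every corner of the header grammar (for example, commas inside quoted strings). The function name is illustrative.

```python
def parse_alt_svc(value: str):
    """Parse a value such as 'h3=":443"; ma=86400' into a list of dicts."""
    services = []
    if value.strip().lower() == "clear":
        return services  # "clear" invalidates previously advertised alternatives
    for entry in value.split(","):
        parts = [p.strip() for p in entry.split(";")]
        protocol, _, authority = parts[0].partition("=")
        params = dict(p.split("=", 1) for p in parts[1:] if "=" in p)
        services.append({
            "protocol": protocol.strip(),
            "authority": authority.strip().strip('"'),
            # "ma" is the freshness lifetime in seconds; 24 hours if omitted.
            "max_age": int(params.get("ma", 86400)),
        })
    return services

print(parse_alt_svc('h3=":443"; ma=86400'))
# [{'protocol': 'h3', 'authority': ':443', 'max_age': 86400}]
```

A browser that sees this entry may try HTTP/3 on UDP port 443 of the same host for the next `max_age` seconds.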

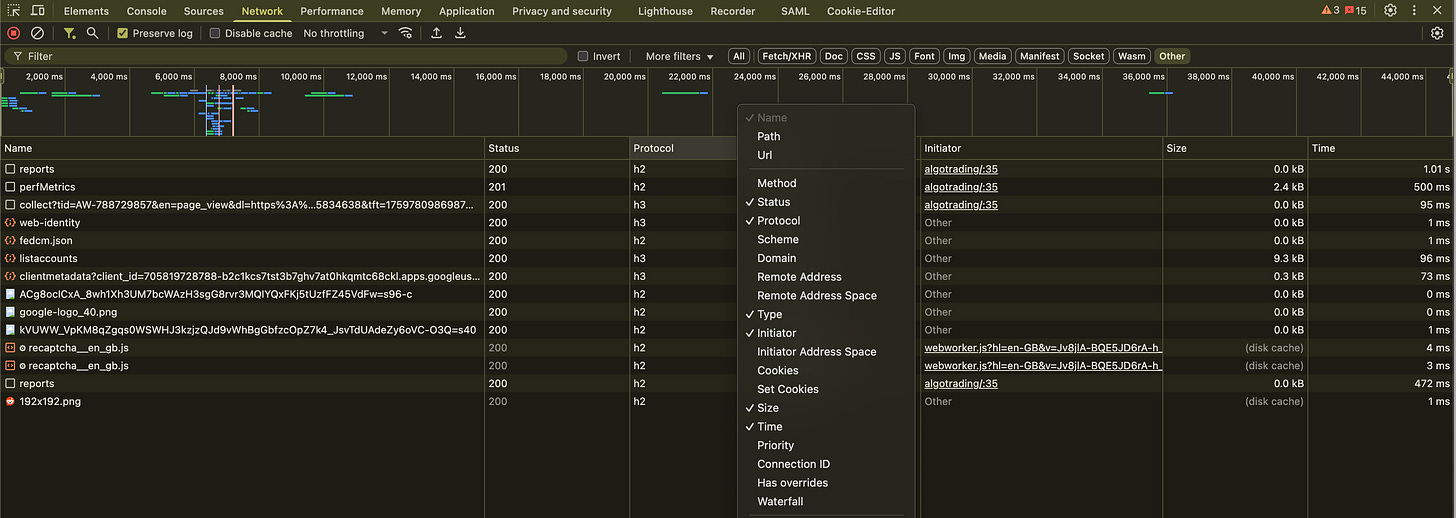

How to Inspect Which Protocol You’re Using

Browser DevTools:

Open Network panel > right-click column header > enable “Protocol”.

Reload and look for h2, h3, or http/1.1.

Command line:

# Force HTTP/2

curl -I --http2 https://example.com

# Attempt HTTP/3 (curl must be built with nghttp3/quiche)

curl -I --http3 https://example.com

# Detailed HTTP/2 framing (-v prints frames, -n discards the body)

nghttp -nv https://example.com

# QUIC packet-level trace (advanced)

sudo qlogctl … (platform-specific)

Minimal Nginx Config Examples

HTTP/2 Enablement (production-stable):

server {
    listen 443 ssl http2;
    server_name example.com;

    ssl_certificate /etc/ssl/fullchain.pem;
    ssl_certificate_key /etc/ssl/privkey.pem;

    location / {
        root /var/www/html;
        index index.html;
    }
}

HTTP/3 (QUIC) Example (requires nginx-quic or alternative like Caddy):

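server {
    # TCP listener for HTTP/1.1 and HTTP/2
    listen 443 ssl http2;
    # UDP listener for HTTP/3
    listen 443 quic reuseport;
    server_name example.com;

    ssl_certificate /etc/ssl/fullchain.pem;
    ssl_certificate_key /etc/ssl/privkey.pem;

    # Advertise HTTP/3 to clients that connected over TCP
    add_header Alt-Svc 'h3=":443"; ma=86400';

    location / {
        root /var/www/html;
        index index.html;
    }
}

Note: HTTP/3 support landed in mainline Nginx 1.25.0, but not every distribution package is built with it. Verify your build: nginx -V should list --with-http_v3_module.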

Closing Thought

Every upgrade of HTTP rewired how the web feels.

HTTP/2 gave us speed through multiplexing.

HTTP/3 gave us resilience through QUIC.

TLS 1.3 and Zero-Trust made every hop secure by design.

We don’t code the protocol,

but it decides how fast, safe, and seamless our code feels to the world.

If you want to explore the details above as part of a mentoring conversation, reach out to us at prabodh